News

- I am the organizer of Open SuperX SLAM for real-time robotics perception and of the Tartan SLAM series, which invites speakers to share insights. Feel free to reach out if you are interested in my work and want to collaborate :-).

- Super Odometry was accepted by Science Robotics and selected as a top feature article. Feel free to check it out here.

- I was invited to give a talk on the IMU Foundation Model at Apple, Cupertino, USA 2025.

- I was invited as an author to write a chapter in the SLAM Handbook, "From Localization and Mapping to Spatial Intelligence", USA 2025.

- One paper was accepted at ICLR 2025, two papers at CVPR 2025, and five papers at ICRA 2025.

- I was the organizer of the ICCV23 SLAM Challenge and the Workshop on Robot Learning and SLAM at ICCV 2023.

- I was the organizer of the Tartan SLAM series at the CMU Robotics Institute, 2022.

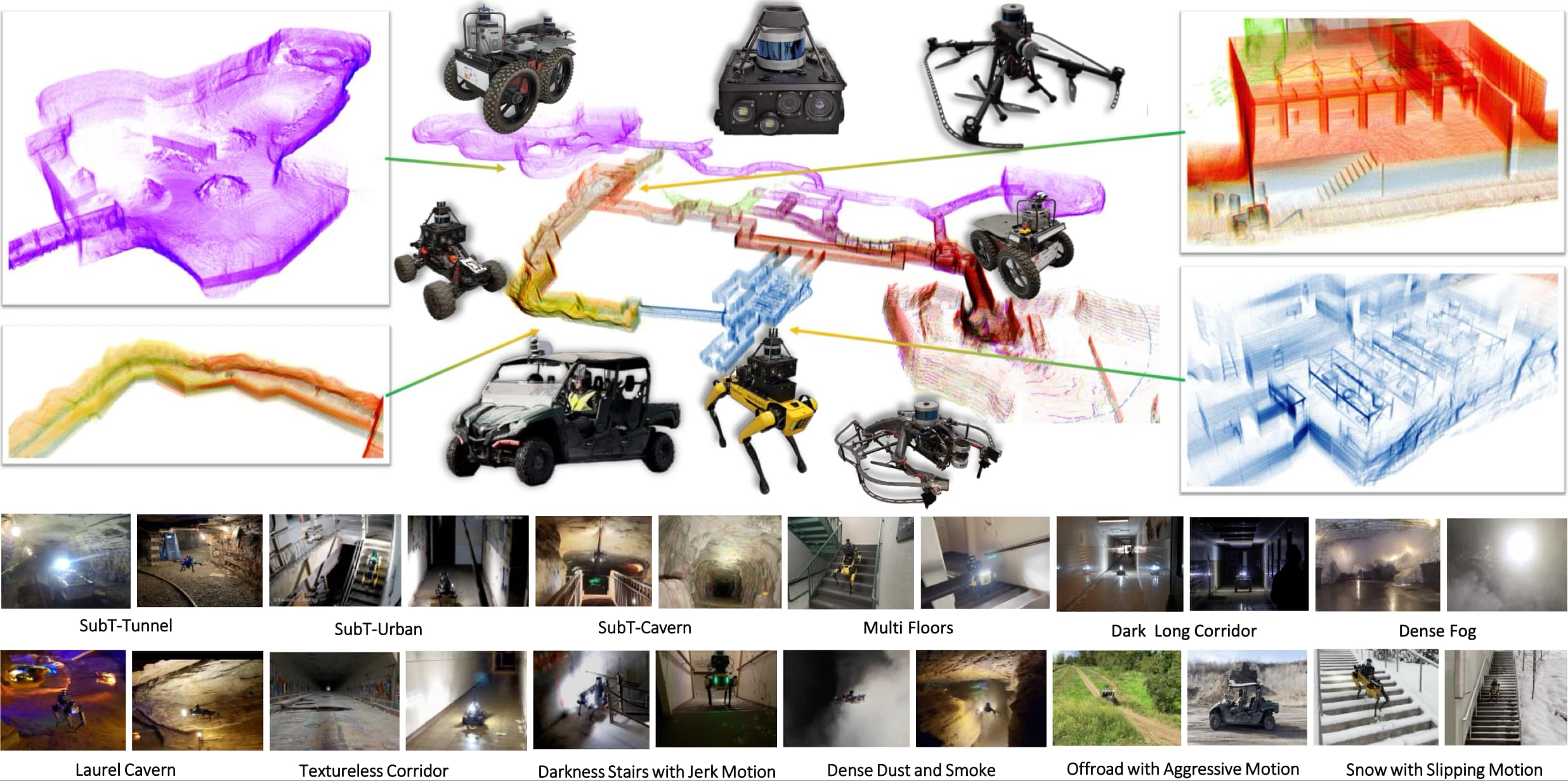

- I developed robotic state estimation and mapping algorithms that enabled a team of robots to explore kilometer-scale underground environments in the DARPA Subterranean Challenge. I helped the team win No. 1 in the DARPA Tunnel Challenge, No. 2 in the DARPA Urban Challenge, and No. 4 in the DARPA Final Challenge, USA 2021.


Research Highlights

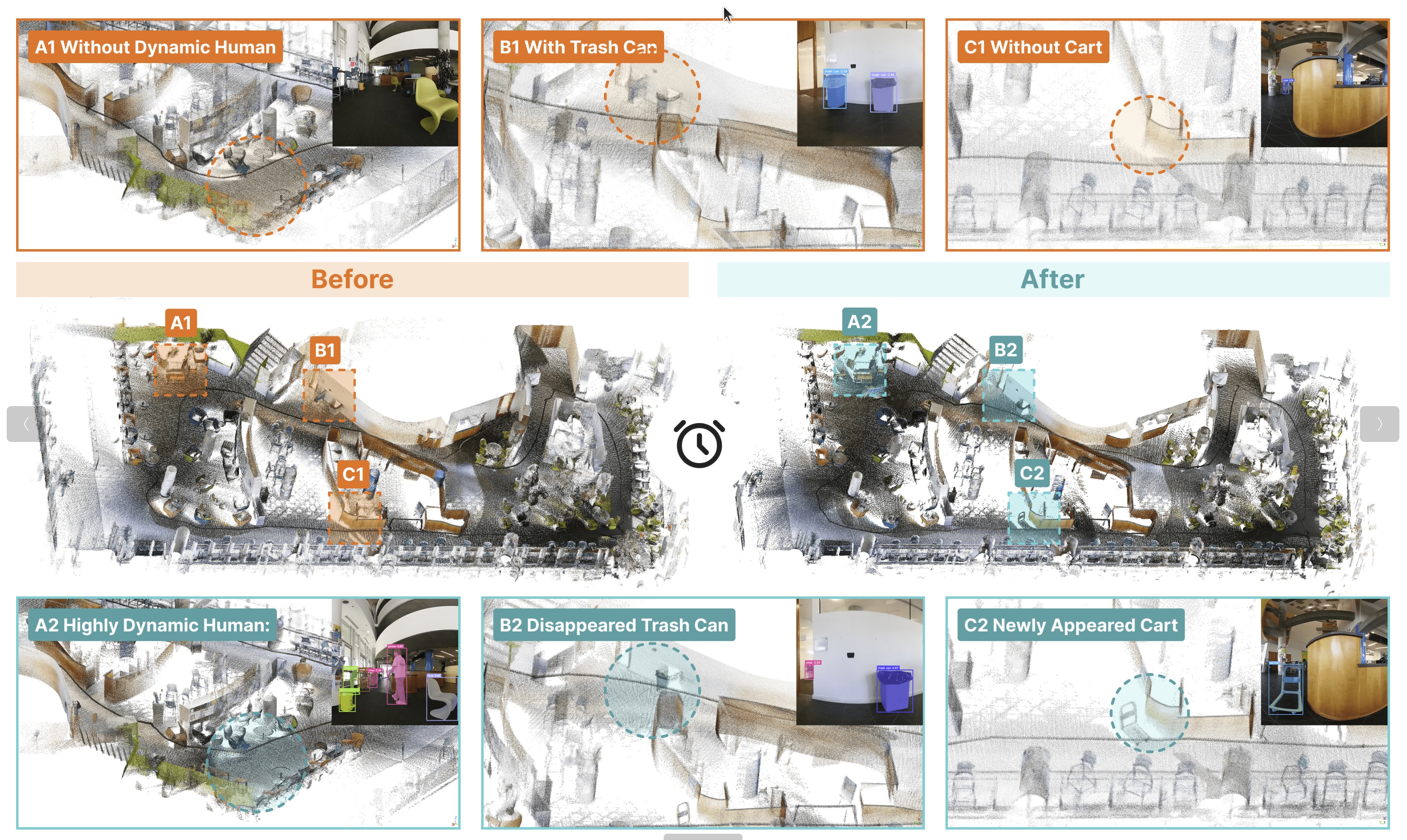

How can we enable robots to perceive, adapt, and understand their surroundings like humans, in real time and under uncertainty? Just as humans rely on vision to navigate complex environments, robots need robust and intelligent perception systems, "eyes" that can endure sensor degradation, adapt to changing conditions, and recover from failure. However, today's visual systems are fragile, easily disrupted by occlusion, lighting variation, motion blur, or dynamic scenes. A single sensor dropout or unexpected environmental change can trigger cascading errors, severely compromising autonomy. My research goal is to develop resilient and intelligent visual perception systems, building the "robust eyes" robots need to thrive in the real world.

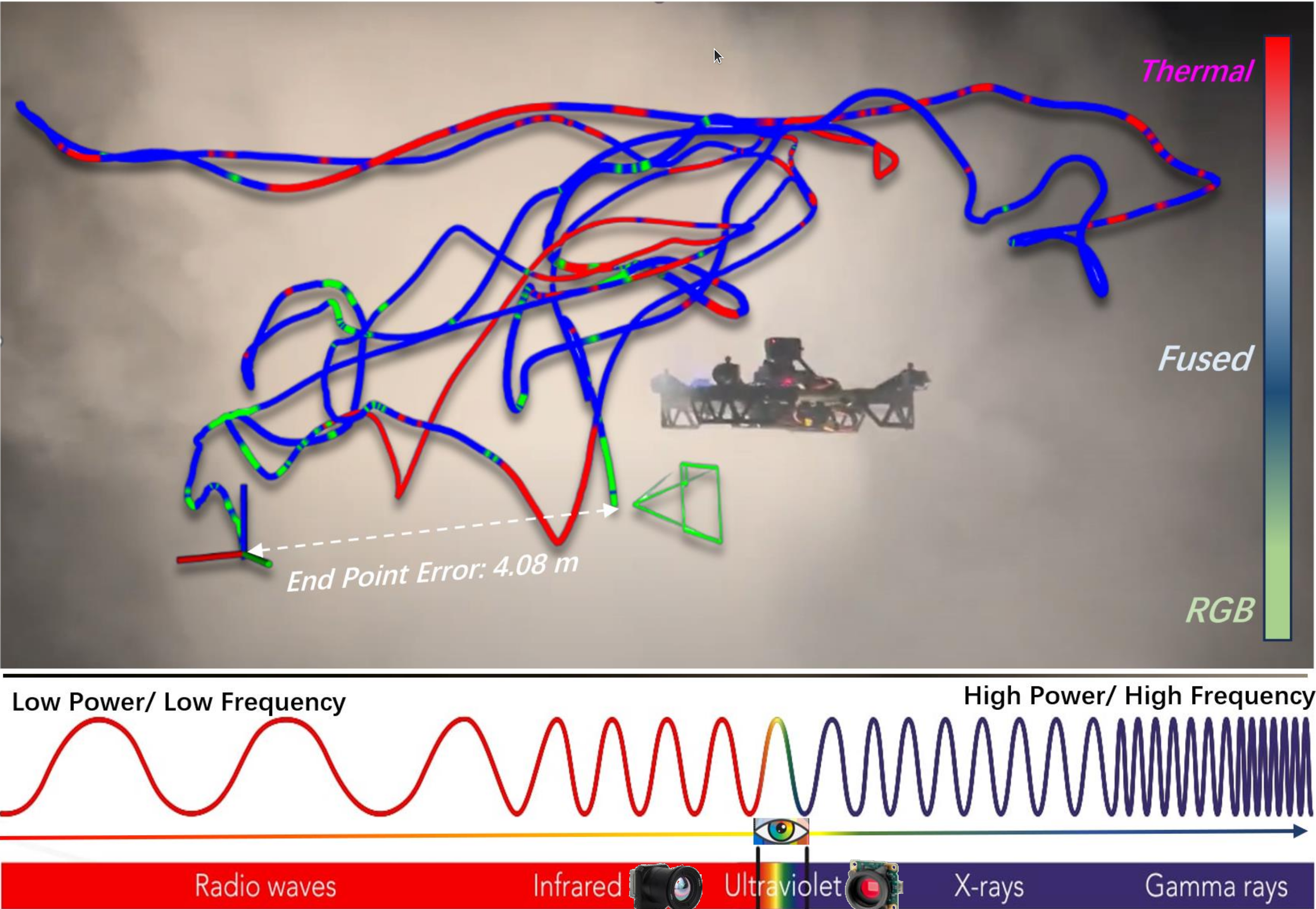

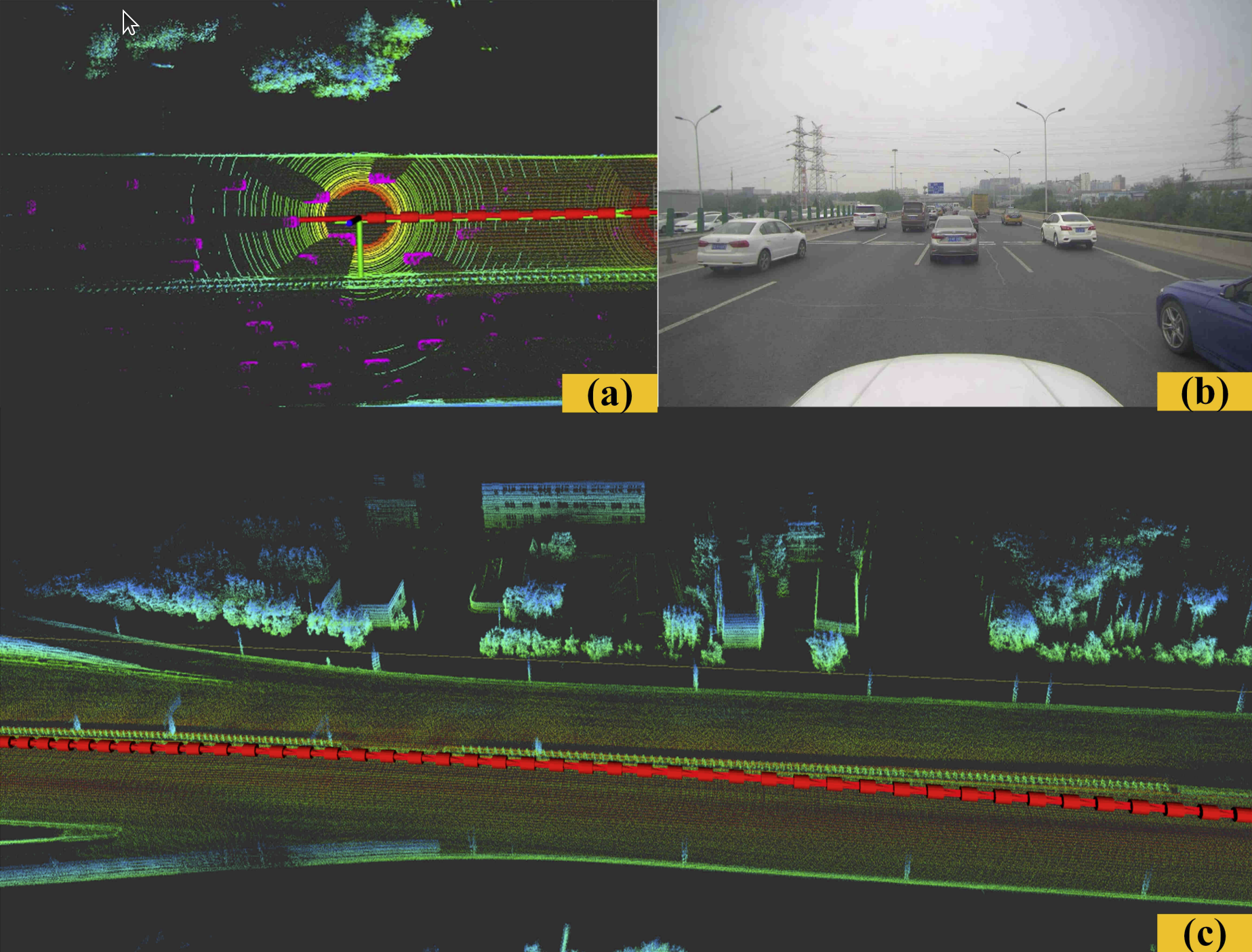

Robust Spatial Perception: Real-world Data Collection for Spatial Intelligence

How can we achieve robust robot perception anywhere and anytime?

How can we capture high-quality, diverse real-world data to train the model (requires three capabilities)?

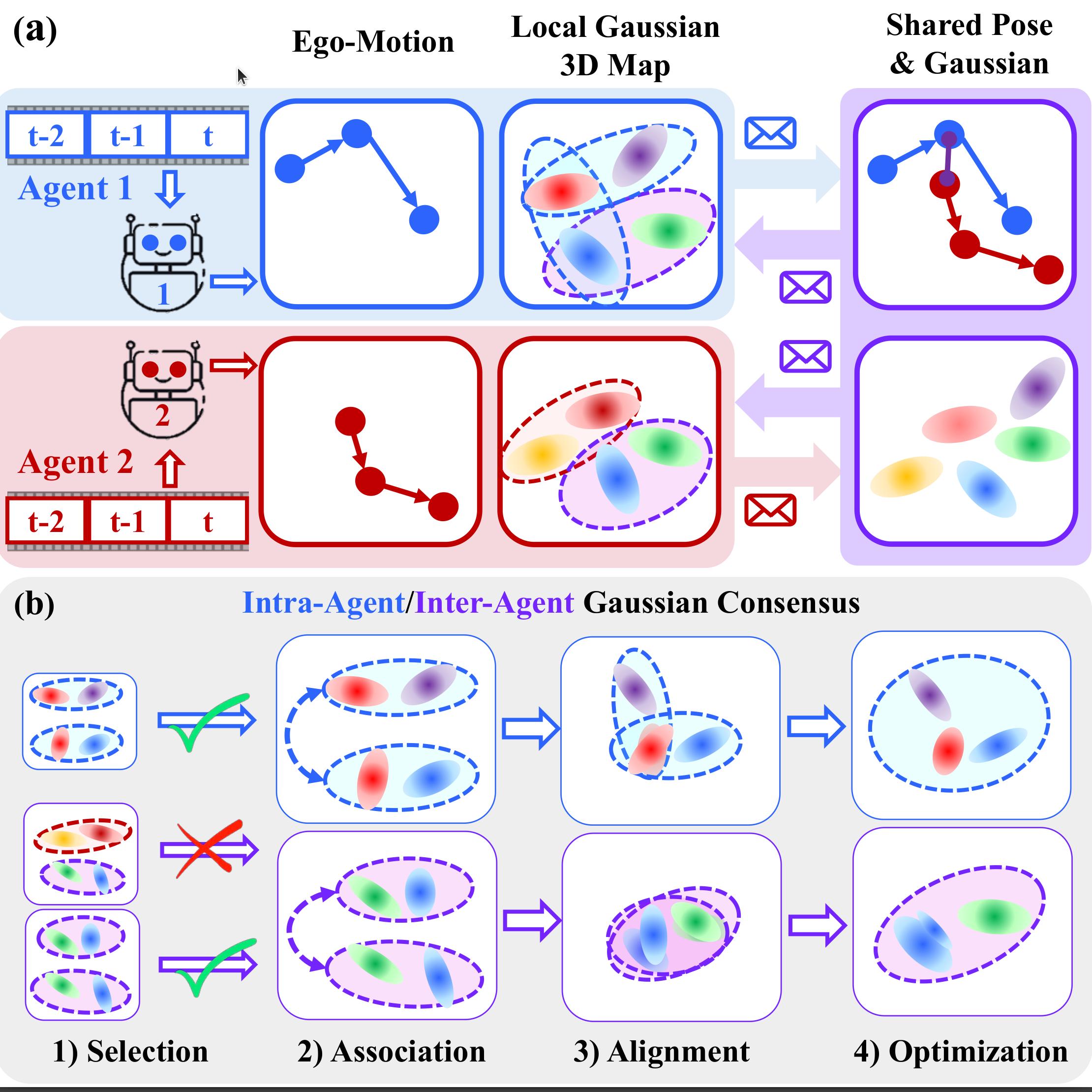


- Generalization: How can we achieve unified sensor fusion across different modalities and robots?


Robust Spatial Perception: Spatial Intelligence Training from Real-world Data

How can we efficiently learn spatial and semantic knowledge from the real-world data above?

How can we leverage the strengths of both data-driven methods (e.g., foundation models) and optimization methods?
